Teach For America (TFA) has sought to direct attention to a new study recently released by Mathematica. A blogger at the Washington Post even argued that my prior critiques of TFA were “not true anymore.” (See all of my prior posts on TFA here.) Is that the case? Next week I will start an entire series on the Mathematica TFA study, but for now, because there is an avalanche of email and media inquiries about the study, I will discuss several important issues that I have noted in the study.

1) Mathematica TFA study does not say much about TFA writ large

The sample is large and covers an adequate number of students, schools and states. However, there are clearly some issues in the TFA sample that call into question whether it really represents TFA and the profession more broadly.

The sample only contains secondary math teachers. However, in most communities, the majority of TFA teachers teach elementary, not secondary (See Houston example below).

The sample is heavily weighted toward middle school: 75% of the classroom matches were in middle schools. Middle school teachers are much rarer in TFA assignments in districts like Houston (See below).

Not only is the sample secondary, but it is focused specifically on math teachers in middle and high school.

Although there is of course variation by site, relatively few TFA teachers teach secondary math. For example, around 82% of TFA teachers in Houston teach subjects other than secondary math. There is a wide variety of assignments. Here is a sample from 2010 (the most recent Houston TFA teacher data I have on hand):

| TFA 2010 position | Teachers |
| --- | --- |
| Tchr, Bilingual | 6 |
| Tchr, Bilingual EC-4 | 1 |
| Tchr, Bilingual Kinderga | 1 |
| Tchr, Bilingual Pre-Kinderg | 2 |
| Tchr, Biology | 1 |
| Tchr, Chemistry | 1 |
| Tchr, English | 14 |
| Tchr, English/Language Arts 4-8 | 2 |
| Tchr, ESL 4-8 | 1 |
| Tchr, ESL Elementary | 8 |
| Tchr, ESL Kindergarten | 1 |
| Tchr, ESL Secondary | 10 |
| Tchr, Fifth Grade | 8 |
| Tchr, First Grade | 5 |
| Tchr, Fourth Grade | 5 |
| Tchr, History | 8 |
| Tchr, Kindergarten | 1 |
| Tchr, Middle Math | 17 |
| Tchr, High School Math | 13 |
| Tchr, Multi-Grade | 3 |
| Tchr, Physical Science | 3 |
| Tchr, Pre-Kindergarten | 1 |
| Tchr, Reading, 6-12 | 10 |
| Tchr, Science | 20 |
| Tchr, Science 4-8 | 1 |
| Tchr, Science 6-8 | 5 |
| Tchr, Science Composite | 1 |
| Tchr, Second Grade | 4 |
| Tchr, Social Studies | 8 |
| Tchr, Third Grade | 3 |
| Total | 164 |
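As a rough check on the 82% figure, here is a minimal Python sketch that recomputes the share from the table counts. It assumes that "Middle Math" and "High School Math" are the only secondary math positions in the list; that classification is an assumption of this sketch, not something the Houston data states.

```python
# Teacher counts copied from the Houston 2010 TFA table in this post.
# Truncated position names are kept exactly as they appear in the table.
houston_tfa_2010 = {
    "Bilingual": 6, "Bilingual EC-4": 1, "Bilingual Kinderga": 1,
    "Bilingual Pre-Kinderg": 2, "Biology": 1, "Chemistry": 1,
    "English": 14, "English/Language Arts 4-8": 2, "ESL 4-8": 1,
    "ESL Elementary": 8, "ESL Kindergarten": 1, "ESL Secondary": 10,
    "Fifth Grade": 8, "First Grade": 5, "Fourth Grade": 5,
    "History": 8, "Kindergarten": 1, "Middle Math": 17,
    "High School Math": 13, "Multi-Grade": 3, "Physical Science": 3,
    "Pre-Kindergarten": 1, "Reading, 6-12": 10, "Science": 20,
    "Science 4-8": 1, "Science 6-8": 5, "Science Composite": 1,
    "Second Grade": 4, "Social Studies": 8, "Third Grade": 3,
}

# Assumption: these two rows are the only secondary math assignments.
SECONDARY_MATH = ("Middle Math", "High School Math")

total_teachers = sum(houston_tfa_2010.values())
secondary_math = sum(houston_tfa_2010[p] for p in SECONDARY_MATH)
share_other = 1 - secondary_math / total_teachers

print(f"Secondary math: {secondary_math} of {total_teachers}")
print(f"Teaching something else: {share_other:.1%}")
```

Under that assumption, the counts reproduce the table total and the roughly 82% share cited above.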

So it makes sense that the study’s sample covers so many schools and states. Even a district like Houston, which has about 10,000 teachers, had only 13 high school TFA math teachers in 2010. Mathematica clearly had to scour the country to get a large sample because secondary math is such a small part of where TFA typically slots its new teachers.

So while TFA and Mathematica have made the case that this study is about TFA writ large, it is not: it focuses on the relatively small number of TFA teachers who teach secondary math.

Another important consideration in the study’s sample is race/ethnicity. Notably, the Mathematica TFA math teacher sample was 80% White and was compared to a sample of non-TFA teachers that was 70% minority. The Mathematica sample clearly doesn’t reflect reality in urban schools or the reality in Teach For America (with the caveat that my reference point is Houston, which may be more diverse than the average TFA site). White teachers are not seeking to teach (or stay) in urban schools in droves, for a variety of reasons that are extensively documented in the research literature. In Houston, for example, fewer than 50% of TFA teachers are White. Thus, the sample does not represent the public school context or even TFA.

2) Size does matter

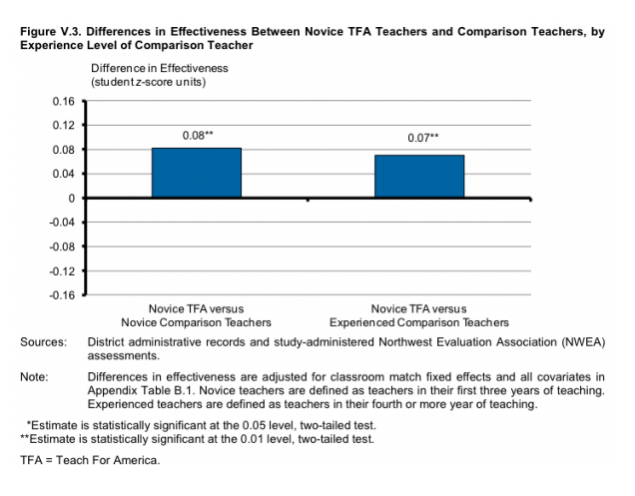

In any intro statistics course, the professor will talk about how a graph can represent information deceptively. One way to make a very small number look important is to shrink the scale on a graph. Check out this graph from the Mathematica study. What do you notice about the scale utilized on the left?

Mathematica used tenths of a standard deviation as their scale. How would these minuscule standard deviation effects of TFA look on a scale of 1, 2, 3, standard deviations?

You need binoculars, maybe a telescope when the effects of secondary TFA math teachers are placed on a scale that is not in tenths of a standard deviation.

So what about the 2.6 month additional learning claim made by Mathematica that they derived from the standard deviation change? We have had extensive discussions about class size as an important policy lever in the nation for several decades. How does the effect of class size reduction stack up against TFA? Kevin Welner wrote:

Julian, please see page 6 of our recent CREDO review, regarding the translation of SD units into days of learning. My guess is that the only way to really help people understand how wacky the process is, or simply to put the 2.6 month claim in perspective, would be to point to other interventions (and to point more generally to the understanding of an effect size that small). Andy Maul did a nice job of the latter in the CREDO review. See the use of Hanushek’s quote on page 7 (0.20 SD, referring to class size reduction, is “relatively small”). Andy also pointed out that, “A difference of 0.01 standard deviations indicates that a quarter of a hundredth of a percent (0.000025) of the variation can be explained.” That paints a very different picture than the use of extra days of learning.
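To see where a number like 0.000025 can come from, here is a minimal Python sketch. It assumes one common textbook conversion from a standardized mean difference d to a correlation r for two equal-sized groups, r = d / sqrt(d² + 4). Whether the CREDO review used exactly this conversion is an assumption of the sketch. The 0.07 and 0.20 values are the TFA and class size figures discussed in this post.

```python
import math


def variance_explained(d: float) -> float:
    """Approximate share of variance explained (r squared) for a
    standardized mean difference d, assuming two equal-sized groups."""
    r = d / math.sqrt(d * d + 4)
    return r * r


# Effect sizes (in standard deviations) mentioned in this post.
for d in (0.01, 0.07, 0.20):
    print(f"d = {d:.2f} SD -> share of variance explained = {variance_explained(d):.6f}")
```

Expressed this way, effects of this size account for a very small share of the variation in scores, which is the perspective the quote argues gets lost in a "months of learning" translation.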

Despite the lofty claims from Mathematica and TFA about this relatively small group of White, secondary (mostly middle school) math teachers, the bottom line appears to be that they are still basically statistically indistinguishable from novice and experienced comparison teachers in terms of their impact on academic test performance.

So if you are a policy maker, you might want to take another look at class size reduction, where you get nearly triple the effect (0.07 for TFA versus the 0.20 for class size reduction that Hanushek reported in his infamous meta-analysis).
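The comparison can be made concrete with a few lines of Python. This is only a sketch of the arithmetic using the two effect sizes cited in this post. The 3-standard-deviation axis is the hypothetical scale raised earlier in the post, not the axis Mathematica used.

```python
tfa_effect_sd = 0.07         # TFA effect cited in this post
class_size_effect_sd = 0.20  # class size reduction figure attributed to Hanushek

# How many times larger is the class size effect?
ratio = class_size_effect_sd / tfa_effect_sd
print(f"Class size effect is {ratio:.2f} times the TFA effect")

# Hypothetical axis running from 0 to 3 standard deviations:
# what fraction of its height would the TFA bar occupy?
axis_max_sd = 3.0
bar_fraction = tfa_effect_sd / axis_max_sd
print(f"TFA bar fills {bar_fraction:.1%} of a 0-to-3 SD axis")
```

On that hypothetical axis the TFA bar would take up only a couple of percent of the vertical space, which is why the choice of scale matters so much for how the result looks.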

3) Findings run contrary to what we know from decades of research about teacher quality

If the Mathematica study is to be believed, nothing matters for secondary student math achievement except the magic of TFA. Why? Because the findings run contrary to decades of research.

Prior ability in math as measured by the Praxis didn’t matter for middle school teachers:

In middle schools, we found no association between teachers’ scores on the Praxis II and student achievement.

Taking math courses or majoring in math in college doesn’t matter for student achievement:

We found no statistically significant relationships between teacher effectiveness and the amount of college-level math coursework completed. Teachers who had completed more than the median number of math courses—7.5 courses—were statistically indistinguishable in their effectiveness from teachers who had completed less than the median number of courses (Table IX.1). Findings were similar when we measured exposure to math coursework on the basis of teachers’ completion of minors, majors, or advanced degrees in math-related subjects.

Working on your master’s or teacher certification has a negative effect on student achievement:

For each additional 10 hours of coursework that teachers took during the school year, the math achievement of their students was predicted to drop by 0.002 standard deviations. These findings imply that a teacher who took an average amount of coursework during the school year, whether for initial certification or any other certification or degree, decreased student math achievement by 0.04 standard deviations.
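The two numbers in that passage imply an average coursework load, which a short Python sketch can back out. The result is a back-calculation from the quoted figures under a simple linear assumption, not a number quoted from the report.

```python
drop_per_10_hours_sd = 0.002  # predicted drop per additional 10 hours of coursework
average_drop_sd = 0.04        # drop quoted for an average amount of coursework

# Assumption: the relationship is linear, so the implied average load is
# (total drop / drop per block) blocks of 10 hours each.
implied_average_hours = average_drop_sd / drop_per_10_hours_sd * 10
print(f"Implied average coursework: about {implied_average_hours:.0f} hours per school year")
```

If the linear reading is right, the quoted figures correspond to roughly 200 hours of coursework in a school year.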

In sum, you will be a better airline pilot (teacher) if:

- You do not have ongoing pilot training. It will hurt your flying skills.

- You do not study to become a pilot before piloting a plane. Just rev the engines. Wohoooooooo.

- You skip the flight simulator. Testing your ability to fly a plane beforehand will have no relationship to your ability to fly one.

4) Mathematica findings run contrary to TFA model

TFA has argued that its program is special because of the selectivity of the colleges and universities from which it recruits. According to the Mathematica study, the selectivity of the college doesn’t matter:

We measured teachers’ general academic ability based on the selectivity of the college or university from which they received their bachelor’s degree. There was no statistically significant difference in effectiveness between teachers from selective colleges or universities and those from all other educational institutions (Table IX.1). Likewise, in sensitivity analyses, we found that teachers from highly selective colleges or universities did not differ in effectiveness from teachers whose colleges or universities had lower levels of selectivity.

Teach For America’s model focuses on a two-year commitment. After that two-year commitment, turnover in many communities often approaches 80%. I likened TFA to a temp agency in the New York Times (See Why is TFA so incensed?). However, the Mathematica study’s findings run contrary to TFA’s rapid-turnover model, as teacher effectiveness increased with teacher experience:

Students assigned to a second-year teacher were predicted to score 0.08 standard deviations higher on math assessments than students assigned to a first-year teacher. Among teachers with at least five years of teaching experience, each additional year of teaching experience was associated with an increase of 0.005 standard deviations in student achievement.
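To put the quoted experience estimates side by side, here is a small Python sketch. It simply divides one quoted figure by the other. Treating the per-year estimate for veterans as a constant yearly gain is an assumption made for illustration, and the 0.07 TFA effect is the figure cited earlier in this post.

```python
second_year_gain_sd = 0.08     # second-year vs. first-year teacher (quoted above)
later_gain_per_year_sd = 0.005 # per additional year, teachers with 5+ years (quoted above)
tfa_effect_sd = 0.07           # TFA effect cited earlier in this post

# Assumption: the veteran per-year gain is constant, so we can ask how many
# such years it takes to match the jump from year one to year two.
equivalent_veteran_years = second_year_gain_sd / later_gain_per_year_sd
print(f"Year 1 -> 2 gain equals about {equivalent_veteran_years:.0f} veteran years")

# The first-to-second-year gain compared with the reported TFA effect.
print(f"Second-year gain exceeds TFA effect: {second_year_gain_sd > tfa_effect_sd}")
```

On these figures, the gain from simply keeping a teacher for a second year is at least as large as the reported TFA effect itself, which is the tension with a two-year model that this section points to.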

Considering the limited focus of the sample, the size of the effect, the wacky results relative to decades of research, and other specific findings that controvert TFA’s basic reform model, the Mathematica TFA study has clearly stimulated irrational exuberance, celebration, and other festivities on the part of TFA and its supporters that are not warranted.

P.S. For another technical evaluation of the study see “Does Not Compute”: Teach For America Mathematica Study is Deceptive? Wonder about the interaction between the TFA network and Mathematica over the past decade? See: Huggy, Snuggly, Cuddly: Teach For America and Mathematica

Want to know about Cloaking Inequity’s freshly pressed conversations about educational policy? Click the “Follow blog by email” button in the upper left hand corner of this page.

Twitter: @ProfessorJVH

Please blame Siri for any typos.

I am actually glad to have glanced at this website’s posts, which contain tons of helpful data. Thanks for providing these statistics.

Reblogged this on Teach For America: Truth of Crime.

I’m not sure that all who read the study felt irrational exuberance. I know I didn’t take it that way. Oversimplified, all the study really told me was that middle school and high school math teachers from TFA were about the same as their public school counterparts. To me, that’s significant, because for years one of the drumbeats against TFA has been that the teachers MUST be less effective because they didn’t go through education classes/student teaching comparable to their traditionally trained new teacher peers, and they don’t have the classroom experience of seasoned teachers. At least within the scope of the study presented, it seems to me that a “do no harm” mantra was somewhat validated.

Yes, I realize this study was only middle school and high school math. Yes, there are issues for a school district to consider when hiring a TFA teacher, like the effect of laying off experienced teachers or the significant recurring costs of TFA. But for the scope of the study, I thought it was informative and useful. If I’m a Principal in an urban school and I’m finding it hard to fill middle school and high school math teaching positions (which is often the case), and I’m wondering what the data says about the effectiveness of TFA math teachers, I’d find this study useful. It wouldn’t fill me with irrational exuberance, just give me a helpful data point in my decision making process. Conversely, if I needed a fill for my special education classroom, I’d do a little research about TFA and SPED, and come to the conclusion TFA wouldn’t be a good fit for that spot.

Do we really expect big differences between different types of teachers, given that teachers only account for ~10% of test score differences?

Did Mathematica also take into consideration whether any of the TFA teachers in their sample had been through a teacher preparation program in university? I notice from your posting that they checked for correlations between math training, university selectivity, and in-service teacher preparation, but it would be important to note whether they had also vetted the sample for any pre-service teacher preparation at the university level. I know several current and former TFAers who graduated from traditional teacher preparation programs WITH their teaching license and went through TFA mostly for job placement and the ability to teach easily in another state. This also undermines the TFA model and the supposition that TFAers are effective because of “who they are” and not “what they know.” Maybe some are effective precisely because they DID have traditional teacher preparation, and nobody is talking about that? Or maybe they are just as effective with that same pre-service teacher education as they would have been in their first few years of teaching as a regularly contracted, non-TFA teacher. It’s an issue that I’ve not seen explored and about which I’m very curious.

Interesting question, and one I didn’t see addressed in the study, as far as I can recall. We do know that while the students were randomly sampled, the teachers were not, which is another important critique. How exactly were these teachers chosen for inclusion?

Reblogged this on Crazy Crawfish's Blog and commented:

Dr Heilig’s initial critique of the TFA/Mathematica study. He makes talking about numbers fun(ish)

Crappy research as a political weapon comes straight from the Nixon Administration and tobacco industry playbook. Nixon commissioned the Westinghouse Study in an effort to justify cutting the Head Start budget. He did not succeed due to the significant pushback from independent scientists and Democrats. The tobacco industry’s institutionalized manipulation of data is infamous. Duncan exemplifies the cunning and dubious connection with honesty of Nixon. He’s made the IES into the 21st century tobacco industry.

How many multiple choice questions are we talking about? How many more multiple choice questions did a TFA-taught student supposedly get right compared to the others? In other words, how many questions is 2.6 months of “extra learning?”

That is a great question that JerseyJazzman also asked. Mathematica did not include this in their 264-page report.

The poverty effects are the biggest for the English tests, not the math tests. They even think it is the enrichment the wealthy kids get over the summer that gives them such a huge jump on poverty kids, which, despite being a generally disproven measure, does show up in standardized tests. So even with mind-numbing, boring Drill Baby Drill teaching to the test all year, no one seems to be able to either help kids read better after a summer break or even help kids do “the circus act of performing that is the standardized tests” after the gap of a summer. So cherry-picking math instead of English would be the only chance to find one obscure fluke. Have you heard of the theory of skirt length versus the economy? I think wealthy kids who can have a nice chateaubriand after work and kick off their heels in their own place, versus a share with three others, could even have a whisper of an effect compared to a debt-loaded state school student. A first-year teacher in most of Florida makes $8 an hour if you figure in overtime per week. Can she afford the yoga lesson she craves to reduce stress?

Great stuff; glad you’re doing the heavy lifting. My quick take:

http://jerseyjazzman.blogspot.com/2013/09/on-new-tfa-study-people-calm-down.html

I watched a presentation by a psychometric specialist to the Orange County Florida school board. She never lied. Even though the overall scores fell and most scores fell, she would find that Howard Middle School fourth graders did better than the state average on their math score. She proceeded to report on the “glass half full” of whatever individual schools looked “good.” This is equivalent to looking at a fitted line on a scattergraph and deciding that one point, Howard Middle, shows an effect even though it is well within the error of the line. Never mind if all the other grades were below the state average. Correlation is not causation. It is hard, though, to show without all the data from TFA whether their staff did worse for, say, elementary school kids. We aren’t given the whole story. To have sliced off such a rare piece of meat, I suspect all the other data will show they did worse. I am currently attacking the math of the Florida VAM. That is some Limburger Cheese of a stinky secret sauce. Advice for your next headline? If one wants a provocative headline, how about “The skirt length effect: How preppy styles of short skirts help eastern elites get their male students and fashion-conscious female students to score a whisper of a breath higher on bogus standardized tests.”

I hope that you will address this in the IES/Mathematica webinar I saw advertised! The USED also advertised it in their Teach weekly email (they want TFA to seem useful SO badly). Keep up the awesome work!

Melissa Finkel
