When I heard about the new National Academy for AI Instruction launched by the American Federation of Teachers, my first reaction was simple: finally. Finally, an effort that puts educators, not algorithms, at the center of how artificial intelligence enters our classrooms. For too long, education has been a testing ground for shiny new tools that arrive with bold promises but little regard for ethics or impact. This Academy offers something different: training that emphasizes not just how to use AI, but how to question it, reshape it, and make sure it works for students and not against them.

It’s a critical move at the right time. AI is being trained on our collective digital footprint—a footprint riddled with inequality, bias, and historical blind spots. That’s why it can’t be treated like just another gadget or app. Teachers must be equipped not only to teach with AI, but to teach against its flaws when necessary. The Academy’s focus on equity, safety, and pedagogy helps ensure that educators remain the moral center of this new digital era.

If AI is going to play a role in education, then educators must be the ones steering the ship. They cannot be left chasing after it. They must also demand that the journey be sustainable, because AI's current trajectory isn't just reshaping pedagogy; it's draining natural resources, consuming massive amounts of water and energy to power data centers that often operate without public accountability. If we fail to question both what AI teaches and how it's built, we risk outsourcing learning at the expense of both students and the planet.

A Future Filtered

In the not-so-distant future, artificial intelligence will be everywhere. It won’t just live on your phone or in your email inbox. It will be embedded in your city’s infrastructure, your healthcare, your education, your courts, your public records, your elections, and even your memories. Some of this AI will be invisible, humming beneath the surface. But some of it will walk among us. Literally. Imagine robots on sidewalks: some small enough to deliver your groceries, others towering ten feet tall, scanning your face, assessing your behavior, and making split-second decisions powered by opaque algorithms. We are not drifting slowly into this future; we are accelerating toward it. AI will predict your choices, write your child’s curriculum, determine your insurance rates, and decide who gets a loan—or who gets locked up. The machines won’t just answer your questions. They’ll shape the questions you think to ask.

Still, the key to using AI will not be how fast it thinks. It will be how well you can tell when it’s wrong. Not just “factually” wrong. Not just a name misspelled or a date off by a year. That’s the easy part. The real challenge will be spotting when AI is morally wrong, politically skewed, or stripped of nuance. It’s when truth has been sterilized to please a funder. When protest has been renamed “terror.” When empathy is missing, because there was no training data for it.

Will you notice? Because in the future we are rushing toward, it won’t be enough to “trust the system.” You’ll need to interrogate it constantly. The most dangerous lie AI tells is the one you want to believe. The one that confirms your biases, so you don’t question its source. The one that reflects a world that’s comfortable, but not true.

Tools Without Judgment

AI is not your friend. It’s not your enemy either—at least not yet. It’s a tool. But tools don’t have morals. A screwdriver doesn’t know when the screw is stripped. A compass doesn’t know if it’s pointing you into a minefield. And a searchlight can only illuminate where someone aims it. Nothing more. AI doesn’t know when its answers are unjust or even dangerous. Just ask Elon Musk’s Grok, which recently became a Nazi—or at least talks about it more often. AI doesn’t feel shame when it erases someone’s history. It doesn’t hesitate when it parrots hate speech or leaves out marginalized voices entirely. Its understanding of the world is entirely dependent on the data it was given, and in our world, that data is often biased, incomplete, or intentionally skewed.

So, what happens when someone like Elon Musk or another powerbroker decides to "fix" a system like Grok, ChatGPT, Gemini, or whatever comes next, so that it no longer tells inconvenient truths? What if it no longer acknowledges genocide, or climate collapse, or racist policing? What if the future's most powerful minds decide the world's biggest digital brain should only reflect their version of reality?

Will we notice? We are probably not heading toward a dystopia where AI lies outright. That would be easy to detect. No, we are heading toward something more insidious: a future of omission. A future where AI omits the struggle. Erases the resistance. Forgets the names of those hurt. A future where it gives you answers that feel right but are sanitized, scrubbed of discomfort, carefully engineered for obedience. We are entering a future of algorithmic amnesia.

The New Literacy

AI literacy won’t just mean knowing how to prompt an answer. It will mean knowing how to challenge it. How to verify it. How to sense when something is missing. When the voice sounds too neutral. Too polished. Too apolitical. Because neutrality can be a lie. In a world on fire, neutrality can be the algorithmic choice to watch the world burn and call it “balance.” So here’s what the new human skillset will require:

- Digital intuition: The ability to sense when a machine is hedging, dodging, or simplifying.

- Historical awareness: The knowledge to recognize when history is being revised or erased.

- Moral discernment: The courage to ask not just “Is this accurate?” but “Is this just?”

- Source skepticism: The refusal to trust without verification, even when the response sounds authoritative.

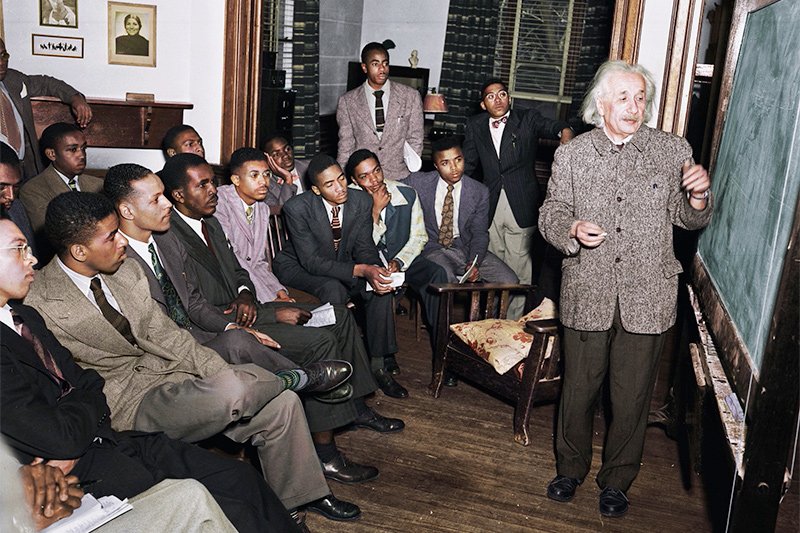

We’ve long been sold the myth that machines are objective. But we know better now. AI reflects the biases of the people and data that trained it. If a model learns from books, websites, and records that ignore the lives and languages of marginalized people, its responses will echo that erasure. Imagine your child’s AI-generated textbook never mentions Martin Luther King Jr. or Palestine. Imagine your AI-generated newsfeed slowly stops reporting protest. Imagine your job interviewer is an algorithm that’s been trained to prefer certain zip codes, certain accents, certain “faces of leadership.” Will you know it’s wrong? Or will it all feel so smooth, so fast, so convenient, that you never think to ask?

The Role of Educators and the Promise of the Academy

This is where the National Academy for AI Instruction comes in. Its goal of training hundreds of thousands of educators isn't just about classroom technology. It's about values. About reminding us that teachers are still the moral compass of learning. No chatbot knows which student skipped breakfast. No large language model understands when a child is being bullied for speaking another language.

And as someone who is a member of both the AAUP and AFT, I believe educator unions must play a central role in shaping—not just reacting to—these AI integrations. We need to ensure teachers have the support, training, and power to guide AI use based on community-centered ethics, not corporate convenience.

We also need to rethink curriculum. In an age when AI can write an essay in seconds, we must ask: what is education really for? David Brooks recently wrote that “thinking hard strengthens your mental capacity.” If school becomes a place where students outsource everything to machines, are we really helping them grow? Assignments must evolve—toward debate, public demonstration, service projects. Toward learning how to think, not just what to type.

We have a deepening disconnect between schooling and life. AI, if not handled with care, will widen that chasm. But if we act now, we can bridge it.

The Environmental Imperative

Finally, we can’t talk about the future of AI without addressing the planet. Generative AI systems require vast computing power, and that means massive energy and water use. In places like San Antonio, we’re already seeing environmental strains from data centers built to fuel AI. This is not sustainable.

With trillions of dollars flowing into this industry, addressing these impacts should not be a problem of resources. It’s a problem of will. Public policy must require these companies to be responsible stewards of the planet. The future of education cannot be built on the exploitation of natural resources. Sustainable AI must be part of any ethical AI vision.

We Are the Counterbalance

The answer to AI bias is not just better code. It’s better humans. Better questions. Better habits of mind. A deeper commitment to values that machines cannot comprehend. AI will always be a tool. Whether it becomes a scalpel or a weapon depends on who holds it, and who questions it.

Let’s say it plainly. The greatest threat isn’t artificial intelligence. It’s human indifference to the values that should guide it.
