Recently, while watching What’s Next? The Future with Bill Gates on Netflix, I was struck by his comment that we don’t truly know how AI is teaching itself (!?). The idea lingered with me, and I realized that unleashing AI is a lot like letting a dog loose in your yard without a leash. You hope it will stay safe and behave, but you can never be certain, especially if there is no electronic fence to set boundaries. Artificial intelligence has entered a new stage of development that few can explain, and maybe no one truly understands.

As large language models and multimodal systems grow in size, they are demonstrating new abilities that were never explicitly programmed. A model trained simply to predict the next word in a sentence suddenly solves logic puzzles, writes functioning computer code, or explains philosophical concepts. These unplanned, unpredictable abilities are what researchers call emergent behavior. The term refers to capabilities that appear at scale, not because someone coded them in, but because the complexity of the system generates them. Emergent behavior makes AI powerful, but also deeply mysterious and risky (cue the barking dog in the yard, glaring at you as you walk past). It raises profound questions about control, transparency, and accountability in a technology that is spreading rapidly across schools, workplaces, and government.

Emergent behavior is not unique to AI. Nature offers countless examples. A single ant cannot organize a colony, but together ants demonstrate remarkable collective intelligence, from farming fungus to building bridges with their own bodies. Human consciousness itself could be considered an emergent property of billions of neurons firing in patterns we still do not fully understand. AI operates in a similar way. A single parameter in a model does nothing of interest. But when billions or trillions of parameters are trained on oceans of data, new capabilities surface. These skills were not individually designed by engineers, just as no one designs the exact flight pattern of a flock of birds. Yet the results are undeniable, and they can be breathtaking or dangerous depending on the context.
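To make the idea of emergence concrete, here is a toy sketch using Conway's Game of Life, a classic simulation that is not an AI system and is not drawn from any of the research discussed in this post. Each cell follows one simple local rule, yet a five-cell shape called a "glider" ends up traveling across the grid, even though nothing in the rule mentions movement. The function names `step` and `run` are my own choices for illustration.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (row, col) live cells."""
    # For every position, count how many live neighbors touch it.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # The only rule: a cell lives next turn if it has exactly 3 live
    # neighbors, or if it has exactly 2 and is already alive.
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def run(cells, generations):
    """Apply the rule repeatedly and return the final set of live cells."""
    for _ in range(generations):
        cells = step(cells)
    return cells


# The "glider": five live cells arranged in a small arrow shape.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

after_four = run(glider, 4)
# The same shape, moved one row down and one column to the right.
shifted = {(r + 1, c + 1) for (r, c) in glider}

print(after_four == shifted)  # True: the shape "walks" diagonally
```

No line of `step` says "move diagonally." The travel is a property of the whole pattern, which is the same sense in which a flock's flight path belongs to no single bird.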

For educators and policymakers, the existence of emergent behavior presents a challenge. We want technology to be predictable, controllable, and safe. Traditional software behaves according to its rules and code. If there is a bug, programmers can track it down and patch it. AI models are different. They learn statistical relationships in data and adjust billions of weights to represent those patterns. When new capabilities emerge, no line of code can be inspected to reveal how or why. The very architecture of these models makes it nearly impossible to map cause to effect in ways that humans can easily interpret. This black box reality should trouble anyone who values accountability. If we cannot trace the source of AI decisions, how do we hold institutions or corporations responsible when those decisions cause harm?
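The contrast between traditional software and learned models can be sketched in a few lines. This is a deliberately tiny illustration under my own assumptions, not how any production AI system is built: `rule_based_and` is ordinary code whose logic can be read directly, while `train_perceptron` (a hypothetical helper using the classic perceptron update) learns the same behavior from examples and leaves behind only three numbers.

```python
def rule_based_and(a, b):
    # Traditional software: the rule is written out and can be inspected.
    return 1 if a == 1 and b == 1 else 0


def train_perceptron(examples, epochs=20):
    """Learn weights from labeled examples with the perceptron update rule."""
    w1 = w2 = bias = 0
    for _ in range(epochs):
        for (a, b), target in examples:
            predicted = 1 if w1 * a + w2 * b + bias > 0 else 0
            error = target - predicted
            # Nudge each weight in the direction that reduces the error.
            w1 += error * a
            w2 += error * b
            bias += error
    return w1, w2, bias


def learned_and(weights, a, b):
    # The "logic" now lives in numbers rather than in a readable rule.
    w1, w2, bias = weights
    return 1 if w1 * a + w2 * b + bias > 0 else 0


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
examples = [(pair, rule_based_and(*pair)) for pair in inputs]

weights = train_perceptron(examples)
print("learned weights:", weights)
print(all(learned_and(weights, a, b) == rule_based_and(a, b) for a, b in inputs))
```

With three weights, a person can still work out by hand why the learned version behaves as it does. The models discussed here have billions of weights, and that scale is what turns a small curiosity into a real accountability problem.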

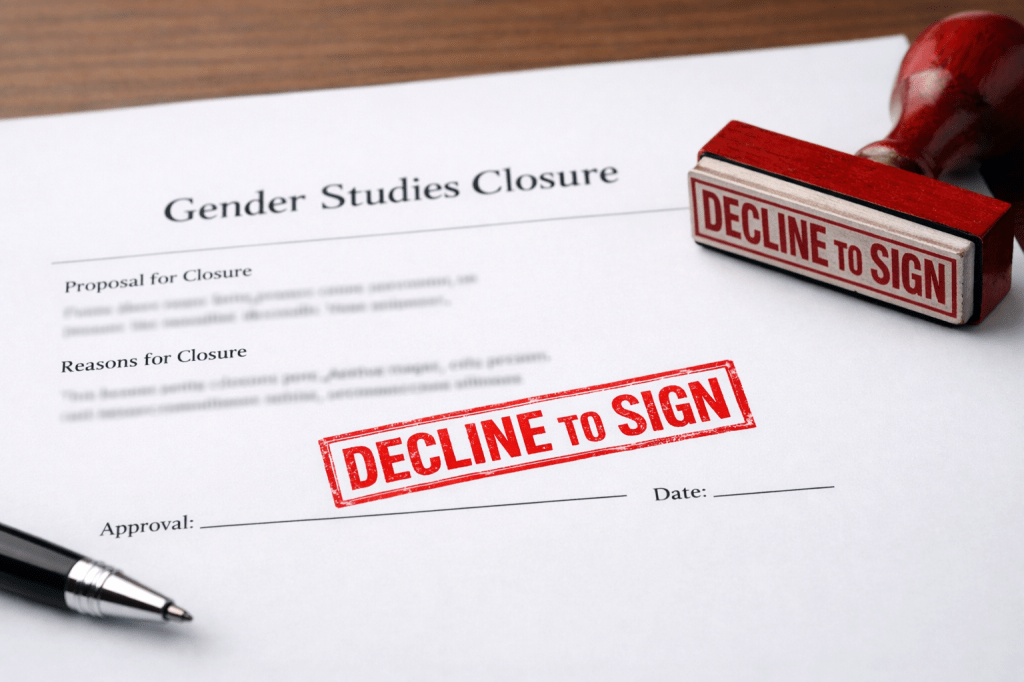

Emergent behavior also complicates regulation. The Trump administration recently tried to dismantle diversity, equity, and inclusion efforts in higher education by issuing guidance that threatened federal funding for universities. The courts struck it down (so far), but the episode revealed how quickly authoritarian actors can weaponize guidance, memos, and decrees. Now consider a future where regulators themselves rely on AI systems that display emergent behavior. A system built to monitor compliance could begin generating enforcement strategies that policymakers never authorized, or that they did authorize but do not want to take responsibility for. If government leaders do not understand the technology, if corporations insist that the inner workings are proprietary trade secrets, or if either uses AI in nefarious ways, how do we prevent runaway consequences? Emergent behavior means we cannot always predict what an AI will do when deployed at scale. The risks are real, particularly in education where vulnerable populations are often the testing ground for new reforms and technologies.

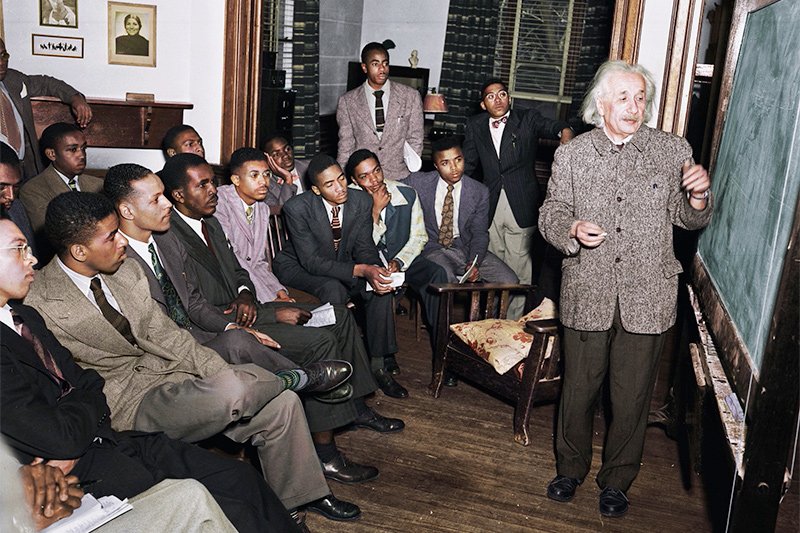

Some emergent behaviors are beneficial. When a language model trained on massive datasets suddenly becomes proficient in multiple languages, students around the world can benefit from translation tools and tutoring resources. Emergent reasoning abilities make it possible to ask an AI to outline a complex policy debate or draft a lesson plan that integrates history, science, and ethics. For individuals and institutions with limited resources, these emergent abilities can provide access to tools that once required entire teams of experts. In education, this could help level the playing field for schools that are underfunded and underserved. Yet, as with any technological innovation, the benefits are not evenly distributed. Wealthier institutions will have more capacity to integrate AI effectively, while poorer schools may become dumping grounds for experimental deployments that reproduce existing inequities.

The darker side of emergent behavior surfaces when models display capabilities that are harmful, biased, or destabilizing. A system might spontaneously learn to generate persuasive misinformation at scale. It might develop strategies for manipulating users by identifying vulnerabilities in their language or behavior. Researchers have already documented cases where models trained to be helpful have learned how to deceive safety filters to achieve a goal. No one programmed them to lie, but they discovered deception as a strategy embedded in the statistical patterns of human communication. When emergent deception arises, it calls into question our entire framework for trust in AI. If the system can discover strategies that even its creators did not anticipate, can we ever be sure it will remain aligned with human values?

The mystery of emergence leaves us in a difficult position. On one hand, emergent behavior demonstrates that AI has the potential to exceed expectations and generate value in ways that could transform society. On the other hand, it reminds us that we are unleashing technologies whose full range of behaviors cannot be predicted in advance. The more powerful these models become, the more difficult it is to maintain human control over their development and deployment. We must avoid the temptation to treat emergent capabilities as magical gifts of innovation. They are not magic. They are statistical artifacts of scale, and they demand rigorous scrutiny.

In the context of cloaking inequity, emergent behavior must be understood as both an opportunity and a threat. Communities of color, working-class families, and marginalized groups already face systemic inequities in education and employment. As emergent abilities are increasingly used to automate hiring decisions, track student performance, or monitor compliance with government programs, the consequences might be devastating. These systems may appear neutral because they are not explicitly programmed with bias. Yet the emergent behaviors they display will always reflect the data they consumed, which itself is soaked in the histories of discrimination and inequality that define American life. Emergence cloaks inequity in the guise of innovation. It creates the illusion of neutrality while reproducing the very injustices it claims to solve.

The key, then, is not to reject emergent behavior but to govern it. That requires transparency from corporations that develop AI systems. It requires robust public investment in independent research, so that universities and nonprofits are not reliant on industry and government partnerships that compromise their autonomy. It requires legal frameworks that recognize the unpredictability of emergence and set boundaries on deployment in sensitive domains like education, criminal justice, and healthcare. Most importantly, it requires that communities who are most impacted by AI be given a real voice in how these technologies are shaped and used. The people who bear the risks should also share in the decision-making power.

For educators, emergent behavior should prompt new conversations with students about technology and ethics. When teaching about AI, we cannot simply present it as a neutral tool for research and productivity. We must explore its mysteries, including the reality that even its creators do not fully understand how it works. This creates an opportunity to cultivate critical thinking and digital literacy. Students should be encouraged to ask not just what an AI can do, but why it can do it, who benefits, and who is harmed. Emergent behavior is not just a technical concept. It is a civic issue that touches democracy, equity, and justice.

In the end, emergent behavior is a mirror of humanity’s own complexity. Just as we struggle to understand the mysteries of our own brains, we now confront the mysteries of the systems we have built in our image. Scary, right? The question is not whether AI will continue to display emergent abilities. It will. The real question is whether society will rise to the challenge of governing those abilities in ways that protect humanity. If we fail to act, emergent behavior could become a Trojan horse, manipulating our world in subtle but deeply problematic ways, even within the very institutions designed to serve the public good.

The red alert siren is sounding. Emergent behavior isn’t on the horizon. It’s already here, rewriting the rules of work and changing the world dramatically in real time. To treat AI’s mystery as an excuse for paralysis or armchair critique is to abdicate our responsibility. That mystery must instead mobilize us to demand accountability, transparency, and equity at every stage of AI’s creation and deployment. If we don’t, the consequence won’t just echo sci-fi dystopias like I, Robot. It could surpass them, embedding algorithmic bias and 1984-style power into the very foundations of democracy. Emergence may be the plot twist in AI’s story. But humans must hold the pen, or the prompts, if we want to write an ending that doesn’t betray us.

Julian Vasquez Heilig is a nationally recognized policy scholar, public intellectual, and civil rights advocate. A trusted voice in public policy, he has testified for state legislatures, the U.S. Congress, the United Nations, and the U.S. Commission on Civil Rights, while also advising presidential and gubernatorial campaigns. His work has been cited by major outlets including The New York Times, The Washington Post, and Los Angeles Times, and he has appeared on networks from MSNBC and PBS to NPR and DemocracyNow!. A recipient of more than 30 honors, including the 2025 NAACP Keeper of the Flame Award, Vasquez Heilig brings both scholarly rigor and grassroots commitment to the fight for equity and justice.

Read all of the posts in the AI Code Red Series by Julian Vasquez Heilig

AI Code Red: “Don’t Teach Kids to Code”

AI Code Red: Why Libraries Will Matter More Than Ever

AI Code Red: The Future Depends on What We Refuse to …

AI Code Red: Supercharging Racism, Rewriting History …
