Every semester, I hear some version of the same question from students, parents, or policymakers: “Why should I have to take biology if I’m not going to be a doctor?” or “Why do students need electives in college that have nothing to do with their major?” On the surface, these seem like practical questions. College is expensive. Time is limited in high school. Why not focus on the courses that directly lead to a job? But beneath those questions lies a deeper misunderstanding of what education is, and what kind of society it’s meant to sustain.

We live in an era obsessed with specialization. Students are encouraged to choose a major early, stack credentials quickly, and enter the workforce as efficiently as possible. Legislators tout degrees that “align with industry needs.” University marketing teams highlight job placement rates as if education were a manufacturing process. Somewhere in all this, we began to lose sight of what higher education was designed to do: not only to prepare people to work, but to prepare them to think.

That capacity to think independently is becoming even more essential in an age of artificial intelligence. AI will automate many technical tasks that once defined professional expertise, but it cannot replace the creativity, empathy, or moral reasoning that define human intelligence. Critical thinking, interdisciplinary understanding, and ethical judgment are what will keep people relevant. At the same time, misinformation about science, politics, and history spreads faster than ever. People must be able to recognize credible evidence, understand complex explanations, and resist the lure of conspiracy. An educated society is not simply one that can build new technologies—it is one that can question how and why those technologies are used.

The Purpose of Biology Class Isn’t Just Biology

Let’s start with that biology question. No, not every student who takes Biology 101 will become a biologist. That’s not the point. You take biology so you can make sense of the world you live in, a world that runs on DNA, bacteria, climate systems, and pandemics. When COVID-19 hit, the world suddenly divided between people who understood the basics of viral transmission and those who did not. People who had taken, and absorbed, introductory biology courses had a framework to interpret information about vaccines, mRNA, or herd immunity. They could discern fact from fiction because they’d already learned the scientific process: hypothesis, evidence, replication, peer review.

Biology doesn’t just teach you about cells or species; it teaches you how we know what we know. That matters in a democracy where public health depends on informed consent and civic participation. You take biology so you can read the news critically, vote responsibly on science-related issues, and recognize the difference between expertise and opinion. A well-educated citizenry needs that foundation. Without it, misinformation flourishes. And when misinformation drives public policy, lives are at stake.

The Case for Electives

Then there’s the question of electives: Why should I have to take a philosophy class, or literature, or political science, or history, if I’m majoring in computer science or accounting? Because you’re not just training for a career; you’re training for citizenship, leadership, and life in a rapidly evolving technological society. Electives expose you to perspectives beyond your own. They introduce you to ideas you may not agree with, histories you’ve never heard, cultures you’ve never encountered, and ethical dilemmas you might one day face.

A student once said, “I don’t see why I need to take a sociology course. I’m majoring in business.” Her instructor asked what she thought business was about. She said, “It’s about making money.” The instructor smiled and said, “No, it’s about people. People who buy, sell, work, and lead. If you don’t understand people, you’ll never understand business.” That’s what electives do. They widen the lens. They challenge you to connect dots between disciplines, to think across boundaries, to see how technology, economics, history, and culture shape one another. That’s not fluff; it’s the foundation of innovation. Many of the most creative breakthroughs in science and technology have come from people who could think metaphorically, draw analogies, and recognize patterns beyond their own field.

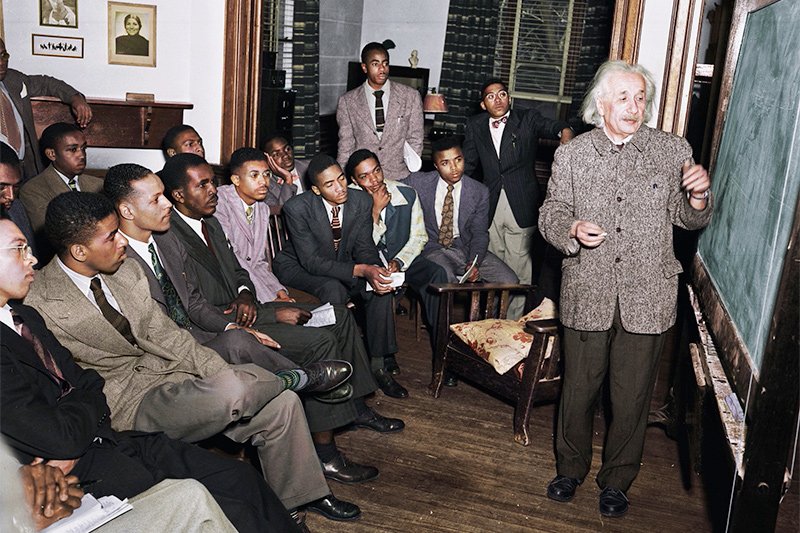

Steve Jobs, who famously dropped out of Reed College, still credited a calligraphy class he sat in on after dropping out with inspiring the typography of the original Macintosh, whose multiple typefaces and proportionally spaced fonts helped shape the fonts we have on computers today. Einstein played the violin to relax his mind and famously said that imagination is more important than knowledge. The most practical minds are often the most curious and well-rounded.

The Problem With “Job-Ready” Education

Policymakers love the phrase “job-ready.” It’s politically convenient and economically reassuring. But the world doesn’t stand still long enough for anyone to stay “ready” for a single job. Entire industries rise and fall within a decade. Many of the jobs today’s college freshmen will hold in 2040 may not even exist yet.

I remember being invited to a dinner with mayors from the Dallas area where the focus wasn’t on how to make students more “college-ready,” but on how to make them instantly employable. The mayors and local business leaders wanted high schools to produce students who could walk directly into manufacturing jobs so companies wouldn’t have to invest in training. Their goal was clear: shift the cost of workforce development onto the public education system. But the skills they wanted schools to teach were narrow and short-lived. When factories like those closed or relocated, many workers discovered that their specialized training didn’t translate to the next job. What had once been a ticket to employment became a trap, leaving families and communities struggling to adapt to an economy that had already moved on.

That conversation in Dallas revealed something deeper about how we talk about education and work. When we reduce learning to the immediate demands of an employer, we risk building an economy of dependency rather than empowerment. True readiness is not about mastering a single skill set; it is about cultivating curiosity, adaptability, and the ability to learn in new and unpredictable contexts. The most valuable workers in the coming decades will be those who can think critically, solve complex problems, and reinvent themselves as the world changes. Preparing students for work without preparing them for change is not workforce development; it is short-term thinking disguised as progress. Education that endures must teach people not only how to make a living, but how to keep learning long after their first job disappears.

Technical proficiency is essential, but it’s not sufficient. A software engineer who can’t communicate with a team is less valuable than one who can. The same is true across every field: collaboration, judgment, and empathy are what make technical skills meaningful. This is especially true now that artificial intelligence is beginning to replace some of the very people who build it. Companies are discovering that advanced AI systems can write code, troubleshoot errors, and even optimize design processes, in some cases faster and more cheaply than large teams of engineers. The result is a paradox: AI is creating new opportunities while simultaneously reducing the need for human labor in some of the most coveted technical professions.

That shift underscores why human intelligence—our ability to reason, question, and connect across disciplines—matters more than ever. AI can generate solutions, but it cannot understand values, ethics, or the broader social consequences of those solutions. It cannot mentor a colleague, mediate a conflict, or imagine a better way forward when the data points in different directions. The people who will thrive in the future are not those who simply know how to use the newest tools, but those who understand when, why, and whether those tools should be used. Education that privileges adaptability, reflection, and communication is not a luxury; it is the foundation for surviving—and shaping—the next wave of technological change. A narrowly trained workforce can build systems, but only a broadly educated citizenry can build a democracy.

Education as a Public Good

The attack on the liberal arts (philosophy, history, literature, and the sciences not tied directly to profit) didn’t come out of nowhere. It reflects a larger cultural shift toward treating education as a private investment rather than a public good. If college is just a transaction (pay tuition, get a credential, earn a salary), then of course people will question the value of courses that don’t seem to have a direct payoff. But if we see education as a civic foundation, the question changes: What kind of society are we building when we decide what’s “worth learning”?

Do we want citizens who can analyze data but not discern truth? Who can code a program but can’t recognize propaganda? Who can design a bridge but can’t see injustice? The ancient Greeks called it paideia: the cultivation of mind, body, and spirit necessary for self-governance. Thomas Jefferson saw an educated public as the foundation of democracy. W.E.B. Du Bois argued that education must teach not simply work, but life. When we narrow education to career training, we shrink the human spirit and weaken the civic fabric. A well-rounded education isn’t an indulgence; it’s an insurance policy against ignorance. It gives you tools to engage in complex debates, from climate change to criminal justice reform, from artificial intelligence to free speech. It gives you empathy, context, and critical thinking.

When you study biology, you learn how systems interact. When you study history, you learn how ideas evolve. When you study literature, you learn how language shapes reality. When you study economics, you learn how incentives shape behavior. When you study philosophy, you learn how to think about thinking. Together, these disciplines build a kind of intellectual resilience that no single major can provide. A student who only learns what’s “useful” for their career may succeed for a few years. A student who learns how to learn—who develops curiosity, adaptability, and moral clarity—can thrive for a lifetime.

Conclusion: Beyond the Transcript

Higher education’s greatest outcomes aren’t always on the transcript. They’re in the capacity to hold two ideas at once without panic. To disagree without dehumanizing. To see the complexity in a headline and ask better questions before reaching for easy answers. That’s what electives do. That’s what general education does. That’s what a biology course does for a non-scientist. They remind us that being educated isn’t about memorizing content—it’s about learning how to live intelligently in a complicated world.

When people ask, “Why should I take biology?” or “Why do I need electives?” I answer: because democracy needs you to. A democracy survives only when its citizens can tell the difference between evidence and emotion, between expertise and conspiracy, between science and superstition. You take biology so you can understand public health debates. You take history so you can recognize when we’re repeating it. You take art so you can imagine alternatives. You take philosophy so you can think clearly when the world gets noisy.

So maybe the real question isn’t “Why should I take biology?” but “What happens to a society when people stop asking questions?” That’s the danger of reducing education to job training: it produces skilled workers, not thoughtful citizens. And without thoughtful citizens, democracy itself begins to fail. A college degree should be more than a ticket to employment; it should be a gateway to understanding. A well-rounded education is the cornerstone of a well-functioning republic. We need engineers who read history, artists who understand data, teachers who know science, and citizens who can think for themselves. Because in the end, an economy runs on skills. But a democracy runs on wisdom.

Julian Vasquez Heilig is an award-winning civil rights leader, scholar, and public intellectual whose career has spanned two decades in higher education, including service as Provost at Western Michigan University, Dean at the University of Kentucky, and faculty leadership roles at the University of Texas at Austin and California State University, Sacramento. A national voice on education policy and social justice, he has testified before legislatures, advised political campaigns, and keynoted across the world. As an undergraduate, his favorite elective was film, which opened his eyes to how storytelling, psychology, and culture intersect—while calculus nearly drove him crazy. That contrast taught him that education should ignite curiosity, not conformity. He shares that vision through his Without Fear or Favor newsletter on LinkedIn, read by more than 1.5 million people in 2025, and as founding editor of the public scholarship blog Cloaking Inequity.
